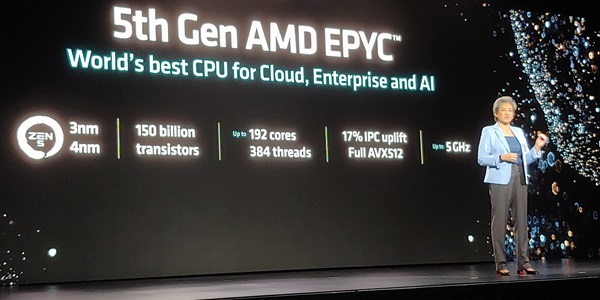

AMD CEO Lisa Su in San Francisco today

Today in San Francisco, AMD announced updated versions of its chip portfolio and moved to position itself as an across-the-board provider of data center technology for organizations implementing Big AI. The company also said it is speeding up its product release cadence, a move clearly aimed at formidable sector heavyweight Nvidia.

“AMD is the only company that can deliver the full set of CPU, GPU and networking solutions to address all of the needs of the modern data center,” said AMD chair and CEO Lisa Su, adding that AMD sees the data center AI accelerator market growing to $500 billion by 2028. “And we have accelerated our roadmaps to deliver even more innovation across both our Instinct and EPYC portfolios while also working with an open ecosystem of other leaders to deliver industry-leading networking solutions.”

At a media pre-briefing yesterday at the Moscone Center, AMD released details on the new chips, including:

Instinct GPUs and Pensando DPU: AMD introduced the Instinct MI325X accelerators, the AMD Pensando Pollara 400 NIC and the AMD Pensando Salina DPU. Built on the AMD CDNA 3 architecture, the MI325X delivers what AMD said is industry-leading memory capacity and bandwidth, with 256GB of HBM3E memory supporting 6.0TB/s, offering 1.8X more capacity and 1.3X more bandwidth than the Nvidia H200 GPU.
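The capacity and bandwidth multiples can be sanity-checked with simple division. The sketch below is illustrative only: the MI325X figures are the ones quoted above, while the H200 figures (141GB of memory, 4.8TB/s of bandwidth) are an assumption taken from Nvidia's published specifications and do not appear in AMD's announcement.

```python
# MI325X figures as quoted in the article.
MI325X_MEMORY_GB = 256
MI325X_BANDWIDTH_TBPS = 6.0

# Assumption: H200 figures from Nvidia's public spec sheet,
# not stated in the article itself.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8


def ratio(amd_value: float, nvidia_value: float) -> float:
    """Return how many times larger the AMD figure is than the Nvidia figure."""
    return amd_value / nvidia_value


capacity_ratio = ratio(MI325X_MEMORY_GB, H200_MEMORY_GB)
bandwidth_ratio = ratio(MI325X_BANDWIDTH_TBPS, H200_BANDWIDTH_TBPS)

print(f"Memory capacity ratio: {capacity_ratio:.2f}x")
print(f"Memory bandwidth ratio: {bandwidth_ratio:.2f}x")
```

Under those assumed H200 figures, the ratios come out to roughly 1.82 and 1.25, which is consistent with the rounded 1.8X and 1.3X multiples AMD cited.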

MI325X offers 1.3X greater peak theoretical FP16 and FP8 compute performance compared to H200, according to AMD. This results in up to 1.3X the inference performance on Mistral 7B at FP16, 1.2X the inference performance on Llama 3.1 70B at FP8 and 1.4X the inference performance on Mixtral 8x7B at FP16 compared with the H200, said AMD, omitting comparison with Nvidia’s flagship Blackwell processors, which are scheduled to ship in the first half of 2025.

AMD Instinct MI325X

AMD said the MI325X accelerators are scheduled for production shipments in Q4 of this year and are expected to have system availability from platform providers, including Dell Technologies, Eviden, Gigabyte, Hewlett Packard Enterprise, Lenovo and Supermicro, starting in Q1 2025.

AMD’s new Pensando programmable DPU, integrating processing with network interface hardware, targets hyperscalers for AI networking. AI networking is split into two parts: the front end, which delivers data and information to an AI cluster, and the back end, which manages data transfer between accelerators and clusters. The Pensando Salina DPU is for the front end and the Pensando Pollara 400, an Ultra Ethernet Consortium (UEC)-ready AI NIC, is for the back end.

The Pensando Salina DPU supports 400G throughput and delivers up to 2X the performance, bandwidth and scale compared to the previous generation AMD product, the company said.

The Pensando Pollara 400, powered by the AMD P4 Programmable engine, is the industry’s first UEC-ready AI NIC, according to AMD. It supports RDMA software and is backed by an open ecosystem of networking.

AMD declined to release GPU pricing details.

On the software front, AMD announced updates to its ROCm software stack for generative AI training and inference. ROCm 6.2 supports the FP8 datatype, Flash Attention 3 and Kernel Fusion and provides up to 2.4X the performance on inference and 1.8X on training compared to the previous ROCm offering, the company said.

“AMD continues to deliver on our roadmap, offering customers the performance they need and the choice they want, to bring AI infrastructure, at scale, to market faster,” said Forrest Norrod, EVP-GM of AMD’s Data Center Solutions Business Group. “With the new AMD Instinct accelerators, EPYC processors and AMD Pensando networking engines, the continued growth of our open software ecosystem, and the ability to tie this all together into optimized AI infrastructure, AMD underscores the critical expertise to build and deploy world class AI solutions.”

AMD 5th Gen EPYC

EPYC 9005 Series CPUs: AMD also announced the availability of 5th Gen AMD EPYC processors, codenamed “Turin.” Built on the “Zen 5” core architecture and compatible with the SP5 platform, the 3/4nm Turin processors range from 8 to 192 cores, with the highest-core-count CPU delivering up to 2.7X the performance of “the competition,” AMD said.

The new EPYC portfolio includes the 64-core AMD EPYC 9575F, built for GPU-powered AI solutions that need maximized host CPU capabilities. “Boosting up to 5GHz, compared to the 3.8GHz processor of the competition, it provides up to 28 percent faster processing needed to keep GPUs fed with data for demanding AI workloads,” AMD said.

AMD said its new “Zen 5” core architecture provides up to 17 percent better instructions per clock (IPC) for enterprise and cloud workloads and up to 37 percent higher IPC in AI and high performance computing (HPC) compared to “Zen 4.”

AMD said the 192-core EPYC 9965 CPU delivers up to 3.7X the performance on end-to-end AI workloads, such as TPCx-AI (derivative). For small and medium size enterprise-class generative AI models, such as Meta’s Llama 3.1-8B, the EPYC 9965 provides 1.9X the throughput performance compared to the competition, the company said. Also, the EPYC 9575F AI host node can use its 5GHz max frequency boost to help a 1,000-node AI cluster drive up to 700,000 more inference tokens per second, AMD said.
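To put the cluster-level claim in per-node terms, the figure can be divided by the node count. This is a rough sketch that assumes the uplift is spread evenly across all nodes, which AMD did not state; the function name is hypothetical and chosen only for illustration.

```python
# Figures as quoted in the article (AMD's claim, not independently measured).
CLUSTER_NODES = 1_000
EXTRA_TOKENS_PER_SECOND = 700_000


def per_node_uplift(total_extra_tokens: int, nodes: int) -> float:
    """Average extra tokens per second per node, assuming an even split."""
    return total_extra_tokens / nodes


uplift = per_node_uplift(EXTRA_TOKENS_PER_SECOND, CLUSTER_NODES)
print(f"{uplift:.0f} extra inference tokens per second per node")
```

Under that even-split assumption, the claim works out to an average of 700 additional inference tokens per second for each node.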

The new EPYCs are available on servers from Cisco, Dell, Hewlett Packard Enterprise, Lenovo and Supermicro, as well as ODMs and cloud service providers.

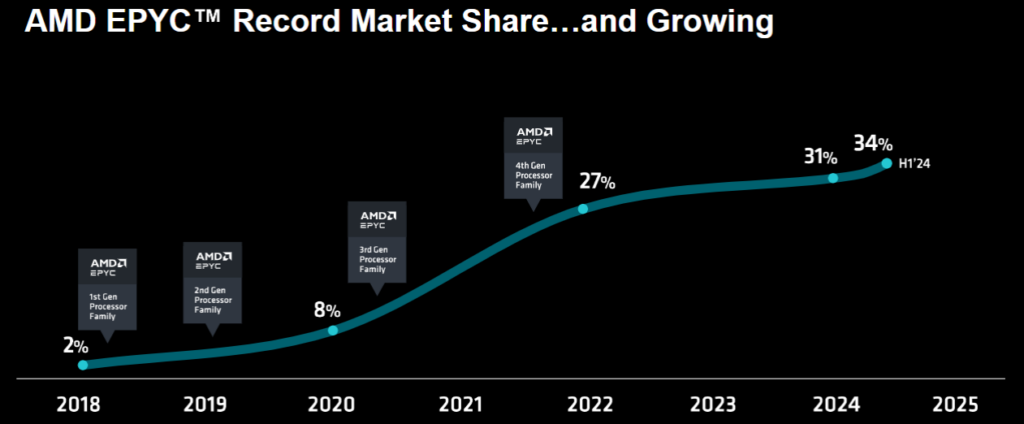

In its long rivalry with x86 CPU market leader Intel, AMD said it has continued to grab more market share, citing analyst firm Mercury Research reporting AMD with a 34 percent share.

credit: AMD
